Bruhnspace AB is engaged in machine learning and artificial intelligence research, development, and product applications. Bruhnspace, in collaboration with Unibap AB and Mälardalen University, is pursuing mission-critical, low-latency data processing on embedded systems. This project page is devoted to AMD's Accelerated Processing Unit (APU) product families and to support for them in ROCm, AMD's professional compute library.

Are You Ready to ROCK on AMD APUs?

AMD ROCm is a powerful foundation for advanced computing that seamlessly leverages both CPU and GPU. Unfortunately, AMD does not provide support for APUs in the official ROCm packages. Bruhnspace provides experimental ROCm packages with APU support for research purposes.

Bruhnspace Public APT repository available

You can now use our apt repository to install ROCm for APUs on Ubuntu. Please see further information at Bruhnspace APT repository.

ROCm 3.10.0 update – core packages available for 20.04 LTS (focal)

Our team has now built ROCm 3.10.0 core packages with APU (gfx902) support for Ubuntu 20.04 LTS (focal), which are available at our APT repository.

These packages are built using Docker and should work with any standard 20.04 installation.

ROCm 3.3.0 update

Our team has now verified that ROCm 3.3.0 can also run on APUs. This required a thorough review of all patches, but it works with TensorFlow 2.2, 2.1, and 1.15.2, and with PyTorch 1.6a (which now includes RCCL and no longer requires a build dependency on NCCL). The builds used CMake 3.16 and protobuf 3.11.4.

    HCC clang version 11.0.0 (https://github.com/RadeonOpenCompute/llvm-project e8173c23afae5686ccb3bf92ccc8f16c7f47023f) (based on HCC 3.1.20114-6776c83f-e8173c23afa )
    HIP       : 3.3.20126
    rocBLAS   : 2.18.0
    rocFFT    : 1.0.2
    rocRAND   : 2.10.0
    hipRAND   : 2.10.0
    rocSPARSE : 1.8.9
    hipCUB    : 2.10.0
    rocPRIM   : 2.10.0
    MIOpen    : 2.3.0
    hipBLAS   : 0.24.0
    hipSPARSE : 1.5.4
    rocALUTION: 1.8.1

    $ python3 -c 'import tensorflow as tf; print(tf.__version__)'
    2.2.0

    $ python3 -c "from tensorflow.python.client import device_lib;print(device_lib.list_local_devices())"
    : I tensorflow/core/platform/profile_utils/cpu_utils.cc:102] CPU Frequency: 1996170000 Hz
    : I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x54caeb0 initialized for platform Host (this does not guarantee that XLA will be used). Devices:
    : I tensorflow/compiler/xla/service/service.cc:176] StreamExecutor device (0): Host, Default Version
    : I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libhip_hcc.so
    : I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x556d980 initialized for platform ROCM (this does not guarantee that XLA will be used). Devices:
    : I tensorflow/compiler/xla/service/service.cc:176] StreamExecutor device (0): AMD Ryzen Embedded V1605B with Radeon Vega Gfx, AMDGPU ISA version: gfx902
    : I tensorflow/core/common_runtime/gpu/gpu_device.cc:1579] Found device 0 with properties:
    pciBusID: 0000:03:00.0 name: AMD Ryzen Embedded V1605B with Radeon Vega Gfx ROCm AMD GPU ISA: gfx902
    coreClock: 1.1GHz coreCount: 11 deviceMemorySize: 16.00GiB deviceMemoryBandwidth: -1B/s
    : I tensorflow/core/common_runtime/gpu/gpu_device.cc:1247] Created TensorFlow device (/device:GPU:0 with 15159 MB memory) -> physical GPU (device: 0, name: AMD Ryzen Embedded V1605B with Radeon Vega Gfx, pci bus id: 0000:03:00.0)

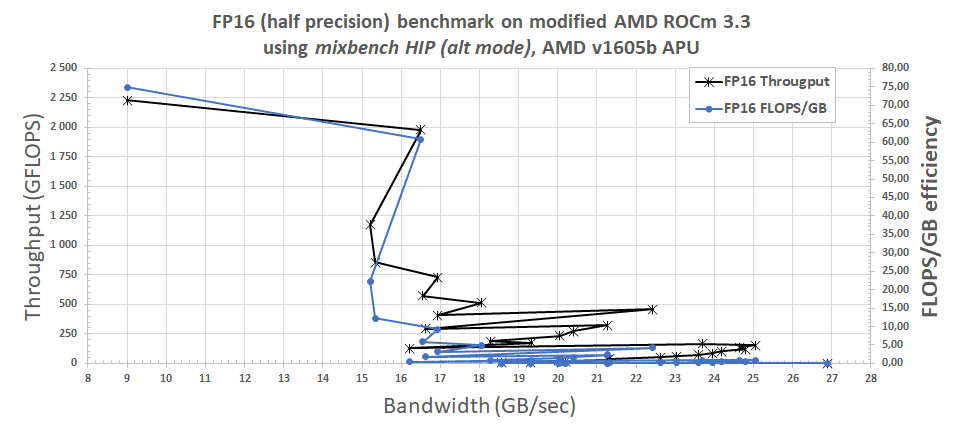

A significant amount of benchmarking and evaluation has been performed. An example from the testing campaign is shown here. It was produced with the Mixbench benchmark (https://github.com/ekondis/mixbench.git) using a modified Makefile:

    $ nano Makefile
    CUDA_INSTALL_PATH = /usr/local/cuda
    OCL_INSTALL_PATH = /opt/rocm/opencl
    $ HIP_PATH=/opt/rocm/hip make -j8
    $ ./mixbench-hip-alt

The benchmark clearly shows different behavior for different loads. A maximum throughput of 2.2 TFLOPS is reached at 9 GB/s bandwidth, and a good 2 TFLOPS throughput is sustained at 16.5 GB/s bandwidth.
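One way to read these two operating points is through arithmetic intensity, that is, the number of floating-point operations performed per byte moved. The sketch below only divides the figures quoted above. It assumes the throughput figures mean 10^12 floating-point operations per second and that GB/s means 10^9 bytes per second; the function name is ours and is not part of Mixbench.

```python
def arithmetic_intensity(tflops: float, gb_per_s: float) -> float:
    """Return floating-point operations per byte for a throughput/bandwidth pair."""
    flops_per_s = tflops * 1e12   # assumption: tera = 10^12 operations per second
    bytes_per_s = gb_per_s * 1e9  # assumption: GB = 10^9 bytes
    return flops_per_s / bytes_per_s


# The two operating points quoted in the text: (throughput, bandwidth).
points = [(2.2, 9.0), (2.0, 16.5)]
for tflops, bandwidth in points:
    intensity = arithmetic_intensity(tflops, bandwidth)
    print(f"{tflops} at {bandwidth} GB/s -> {intensity:.0f} FLOP/byte")
```

Under those assumptions the two points correspond to roughly 244 and 121 floating-point operations per byte, respectively.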

To explore ROCm on APUs further, try ROCm 2.6 for APUs on Ubuntu 18.04 by following the instructions below.

GCC / GLIBC ABI update

Please note that these libraries are built against libstdc++ 6.0.26 and not the default Ubuntu 18.04.3 package. Follow the Newbie instructions below first, then update the GCC libraries as follows:

    $ sudo add-apt-repository ppa:ubuntu-toolchain-r/test
    $ sudo apt update
    $ sudo apt upgrade

You need to see the following for the rocm-apu packages to work:

    $ ll /usr/lib/x86_64-linux-gnu/libstdc++.so.6
    lrwxrwxrwx 1 root root 19 may 21 13:37 /usr/lib/x86_64-linux-gnu/libstdc++.so.6 -> libstdc++.so.6.0.26
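The same symlink check can be scripted. The following is a minimal sketch, assuming the library path shown in the listing above and assuming that any libstdc++ release at or above 6.0.26 is acceptable (the text only confirms 6.0.26 itself). The helper names are hypothetical.

```python
import os
import re

# Path taken from the listing above; adjust for other architectures.
LIBSTDCXX = "/usr/lib/x86_64-linux-gnu/libstdc++.so.6"
REQUIRED = (6, 0, 26)


def parse_libstdcxx_version(target: str):
    """Extract (major, minor, patch) from a name like 'libstdc++.so.6.0.26'."""
    match = re.search(r"libstdc\+\+\.so\.(\d+)\.(\d+)\.(\d+)$", target)
    if match is None:
        return None
    return tuple(int(part) for part in match.groups())


def libstdcxx_is_new_enough(path: str = LIBSTDCXX, required=REQUIRED) -> bool:
    """Resolve the symlink and compare the version in its target name."""
    if not os.path.exists(path):
        return False
    target = os.path.basename(os.path.realpath(path))
    version = parse_libstdcxx_version(target)
    # Assumption: newer releases than the required one are also fine.
    return version is not None and version >= required


if __name__ == "__main__":
    ok = libstdcxx_is_new_enough()
    print("libstdc++ OK" if ok else "libstdc++ too old or missing")
```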

Download ROCm for APU devices

Please see our ROCm-apu file repository to download patched installation packages that support AMD APUs. Currently, Ubuntu 18.04.3 or a newer point release with the hardware enablement stack installed is supported. The following APU families are supported:

- gfx801, Carrizo, Bristol Ridge, G-Series SOC, R-Series SOC.

- gfx902, Raven Ridge (Ryzen), V-Series, V1000, R-Series 1000.

Download the ROCm Debian package files from our rocm-apu file repository.

Machine Learning

TensorFlow on AMD APU

Bruhnspace provides experimental builds of TensorFlow for AMD APUs. You can find installation wheels for TF v1.14.1 and TF v2.0.0 for:

- TF v1.14.1 & v2.0.0 for the Carrizo (gfx801) family, R-Series, G-Series, etc., tested on AMD A10-8700P Radeon R6

- TF v1.14.1 & v2.0.0 for the Raven (gfx902) family, V1000 series, etc., tested on AMD Ryzen Embedded V1605B with Radeon Vega Gfx

Download TensorFlow installation wheels from our rocm-apu file repository. A log file of a simple test is provided as well to show what the output should look like.

PyTorch

Bruhnspace provides an experimental build of PyTorch for AMD APUs. You can find the installation wheel for PyTorch v1.3.0 supporting:

- Carrizo (gfx801) family, R-Series, G-Series, etc., tested on AMD A10-8700P

- Raven (gfx902) family, V1000 Series, R-Series.

Download the PyTorch installation wheel from our rocm-apu file repository. A log file for simple testing and installation instructions are included.
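Once the wheel is installed, a quick smoke test can show whether the APU is visible to the framework. The sketch below assumes the ROCm build exposes the GPU through the usual `torch.cuda` interface, as upstream ROCm builds do. The helper name `describe_gpu` is ours, and its output will not necessarily match the log file in the repository.

```python
def describe_gpu():
    """Return the GPU name reported by the framework, or None if none is usable."""
    try:
        import torch  # provided by the installed wheel
    except ImportError:
        return None
    # ROCm builds report their devices through the torch.cuda namespace.
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_name(0)


if __name__ == "__main__":
    name = describe_gpu()
    if name:
        print(f"GPU visible: {name}")
    else:
        print("No GPU visible (or the wheel is not installed)")
```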

PlaidML

PlaidML works out of the box with ROCm for APUs (OpenCL support for APUs is enabled in the AMD ROCm packages). Remember to use python3 and follow the regular instructions for setting up a virtual environment.

Radeontop GPU tool upgrade

Ubuntu 18.04 ships with an old radeontop package that lacks Raven (gfx902) support. Download a newer (v1.2) installation file from our rocm-apu file repository.

Newbie instructions

To get rockin’ with our ROCm APU packages you only have to do a few things. Let’s assume that you have a compatible system in your hands with a working setup. Getting to a working setup, however, has been a real pain throughout the Heterogeneous System Architecture (HSA) bring-up, with many systems broken by erroneous BIOS implementations (missing CRAT, IVRS, or IVHD table configurations, etc.). Many investigations have been made to identify working configurations. Here is a summary of the most critical items.

DO NOT USE THE AMD PROVIDED rocm-dkms PACKAGE. THE APUS ARE ONLY SUPPORTED IN THE UPSTREAM LINUX KERNEL DRIVER. MAKE GOOD NOTE OF THE MINIMUM KERNEL VERSIONS. Our rocm-dkms package has the kernel driver removed so that the kernel driver provided by Linux gets selected.

A minimum requirement (in addition to a correct BIOS) to get ROCm working on APUs is to strictly adhere to the following Linux kernel versions:

- AMD Carrizo/Bristol Ridge requires Linux Kernel 4.18 or newer

- AMD Raven Ridge requires Linux Kernel 4.19 or newer

- The upcoming AMD Renoir requires Linux Kernel 5.4 or newer
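The minimum versions above can be checked against the running kernel. This is a minimal sketch that compares only the major and minor numbers; the family keys and function names are hypothetical labels chosen for illustration.

```python
import platform
import re

# Minimum kernel versions from the list above, keyed by hypothetical short names.
MIN_KERNEL = {
    "carrizo": (4, 18),  # Carrizo / Bristol Ridge
    "raven": (4, 19),    # Raven Ridge
    "renoir": (5, 4),    # Renoir
}


def kernel_version(release: str):
    """Parse a release string such as '5.0.0-37-generic' into (5, 0)."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def kernel_supports(family: str, release: str) -> bool:
    """True if the given kernel release meets the minimum for the APU family."""
    return kernel_version(release) >= MIN_KERNEL[family]


if __name__ == "__main__":
    release = platform.release()
    try:
        for family in MIN_KERNEL:
            status = "ok" if kernel_supports(family, release) else "kernel too old"
            print(f"{family}: {status} (running {release})")
    except ValueError as error:
        print(error)
```

For example, `kernel_supports("raven", "4.18.20")` is false, while the Ubuntu 18.04 HWE 5.0 kernel passes for Carrizo and Raven Ridge but not for Renoir.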

Begin with a clean Ubuntu 18.04.3 (AMD64) or newer installation, then install the hardware enablement stack if it was not selected during installation. We suggest using Ubuntu 18.04.3 with hardware enablement, which is supported until 2023 and brings Linux Kernel 5.0. All testing of the experimental packages was done on 5.0, although we have also used 5.1 and newer.

    sudo apt install --install-recommends linux-generic-hwe-18.04 xserver-xorg-hwe-18.04

If you also intend to do development, install the dependencies:

    sudo apt install libnuma-dev git cmake build-essential pkg-config libpci-dev lsb-core rpm \
        libelf-dev patch python ocaml ocaml-findlib git-svn curl libgl1-mesa-dev \
        doxygen dpkg make cmake-curses-gui python2.7 python-yaml gfortran libboost-program-options-dev \
        libxml2-dev coreutils g++-multilib gcc-multilib findutils libelf1 libpci3 file \
        systemtap-sdt-dev libfftw3-dev libboost-filesystem-dev libboost-system-dev libssl-dev wget \
        graphviz texlive-full

You must also upgrade Google Test, CMake (> 3.12), and z3. If you want to hipify CUDA code, you must also install CUDA Toolkit 10.1.

Install our ROCm deb files with APU support and get rockin’.

License and support

ABSOLUTELY NO WARRANTY IS GIVEN. THIS IS FOR DEVELOPMENT AND TEST ONLY. AMD MAKES NO GUARANTEES THAT APU SUPPORT WILL WORK, ALTHOUGH TESTING MAY SHOW THAT IT DOES. SORRY FOLKS. YOU’RE ON YOUR OWN PLAYING WITH THIS.

F.A.Q. section

Q: I am looking for the patches to enable ROCm on APUs. Can you share them?

A: Unfortunately not. Bruhnspace cannot share the patches.

Q: How do I know if my system has proper amdgpu/kfd support for ROCm?

A: Several things must work. Please check the basics by running the following after booting the Ubuntu 18.04 HWE 5.0 kernel (this example is from a Carrizo Acer Aspire E5 laptop):

    ~$ dmesg | grep kfd
    [ 3.914232] kfd kfd: Allocated 3969056 bytes on gart
    [ 3.914432] kfd kfd: added device 1002:9874 // Added Carrizo OK

    ~$ dmesg | grep iommu
    [ 0.917619] pci 0000:00:01.0: Adding to iommu group 0
    [ 0.917682] pci 0000:00:01.0: Using iommu direct mapping
    [ 0.917713] pci 0000:00:01.1: Adding to iommu group 0
    [ 0.917765] pci 0000:00:02.0: Adding to iommu group 1
    [ 0.917785] pci 0000:00:02.2: Adding to iommu group 1
    [ 0.917801] pci 0000:00:02.3: Adding to iommu group 1
    [ 0.917872] pci 0000:00:03.0: Adding to iommu group 2
    [ 0.917889] pci 0000:00:03.1: Adding to iommu group 2
    [ 0.917964] pci 0000:00:08.0: Adding to iommu group 3
    [ 0.918039] pci 0000:00:09.0: Adding to iommu group 4
    [ 0.918054] pci 0000:00:09.2: Adding to iommu group 4
    [ 0.918123] pci 0000:00:10.0: Adding to iommu group 5
    [ 0.918189] pci 0000:00:11.0: Adding to iommu group 6
    [ 0.918263] pci 0000:00:12.0: Adding to iommu group 7
    [ 0.918342] pci 0000:00:14.0: Adding to iommu group 8
    [ 0.918358] pci 0000:00:14.3: Adding to iommu group 8
    [ 0.918450] pci 0000:00:18.0: Adding to iommu group 9
    [ 0.918467] pci 0000:00:18.1: Adding to iommu group 9
    [ 0.918484] pci 0000:00:18.2: Adding to iommu group 9
    [ 0.918500] pci 0000:00:18.3: Adding to iommu group 9
    [ 0.918516] pci 0000:00:18.4: Adding to iommu group 9
    [ 0.918532] pci 0000:00:18.5: Adding to iommu group 9
    [ 0.918548] pci 0000:01:00.0: Adding to iommu group 1
    [ 0.918609] pci 0000:02:00.0: Adding to iommu group 1
    [ 0.922096] perf/amd_iommu: Detected AMD IOMMU #0 (2 banks, 4 counters/bank).

    ~$ dmesg | grep drm
    [ 3.301528] [drm] amdgpu kernel modesetting enabled.
    [ 3.303933] fb0: switching to amdgpudrmfb from VESA VGA
    [ 3.314007] [drm] initializing kernel modesetting (CARRIZO 0x1002:0x9874 0x1025:0x095E 0xC5).
    [ 3.314378] [drm] register mmio base: 0xF0D00000
    [ 3.314379] [drm] register mmio size: 262144
    [ 3.314382] [drm] add ip block number 0
    [ 3.314383] [drm] add ip block number 1
    [ 3.314384] [drm] add ip block number 2
    [ 3.314385] [drm] add ip block number 3
    [ 3.314386] [drm] add ip block number 4
    [ 3.314388] [drm] add ip block number 5
    [ 3.314389] [drm] add ip block number 6
    [ 3.314390] [drm] add ip block number 7
    [ 3.314391] [drm] add ip block number 8
    [ 3.314392] [drm] add ip block number 9
    [ 3.314410] [drm] UVD is enabled in physical mode
    [ 3.314413] [drm] VCE enabled in physical mode
    [ 3.342457] [drm] BIOS signature incorrect 0 0
    [ 3.346801] [drm] vm size is 64 GB, 2 levels, block size is 10-bit, fragment size is 9-bit
    [ 3.346835] [drm] Detected VRAM RAM=512M, BAR=512M
    [ 3.346837] [drm] RAM width 64bits UNKNOWN
    [ 3.351627] [drm] amdgpu: 512M of VRAM memory ready
    [ 3.351629] [drm] amdgpu: 3072M of GTT memory ready.
    [ 3.351670] [drm] GART: num cpu pages 262144, num gpu pages 262144
    [ 3.351717] [drm] PCIE GART of 1024M enabled (table at 0x000000F4007E9000).
    [ 3.511353] [drm] Found UVD firmware Version: 1.87 Family ID: 11
    [ 3.511371] [drm] UVD ENC is disabled
    [ 3.562978] [drm] Found VCE firmware Version: 52.4 Binary ID: 3
    [ 3.602977] [drm] Display Core initialized with v3.2.17!
    [ 3.651956] [drm] SADs count is: -2, don't need to read it
    [ 3.671381] [drm] Supports vblank timestamp caching Rev 2 (21.10.2013).
    [ 3.671383] [drm] Driver supports precise vblank timestamp query.
    [ 3.711213] [drm] UVD initialized successfully.
    [ 3.910716] [drm] VCE initialized successfully.
    [ 3.922937] [drm] fb mappable at 0x1FFDCC000
    [ 3.922938] [drm] vram apper at 0x1FF000000
    [ 3.922939] [drm] size 8294400
    [ 3.922940] [drm] fb depth is 24
    [ 3.922941] [drm] pitch is 7680
    [ 3.923072] fbcon: amdgpudrmfb (fb0) is primary device
    [ 3.930413] amdgpu 0000:00:01.0: fb0: amdgpudrmfb frame buffer device
    [ 3.972224] [drm] Initialized amdgpu 3.30.0 20150101 for 0000:00:01.0 on minor 0

This system is likely to work with ROCm APU.
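The three checks can also be automated by scanning the kernel log text for the key messages. The sketch below is only a rough heuristic: the patterns are taken from the sample output above and other kernel versions may word these messages differently. The check names, `SAMPLE`, and `check_dmesg` are hypothetical.

```python
import re

# Patterns derived from the sample log above (assumption: wording may vary by kernel).
CHECKS = {
    "kfd device added": re.compile(r"kfd kfd: added device [0-9a-f]{4}:[0-9a-f]{4}"),
    "IOMMU detected": re.compile(r"Detected AMD IOMMU"),
    "amdgpu initialized": re.compile(r"\[drm\] Initialized amdgpu"),
}

# Three lines copied from the sample output, used here as demonstration input.
SAMPLE = """\
[ 3.914432] kfd kfd: added device 1002:9874
[ 0.922096] perf/amd_iommu: Detected AMD IOMMU #0 (2 banks, 4 counters/bank).
[ 3.972224] [drm] Initialized amdgpu 3.30.0 20150101 for 0000:00:01.0 on minor 0
"""


def check_dmesg(text: str) -> dict:
    """Return {check name: bool} telling whether each pattern occurs in the log text."""
    return {name: bool(pattern.search(text)) for name, pattern in CHECKS.items()}


if __name__ == "__main__":
    for name, found in check_dmesg(SAMPLE).items():
        print(f"{name}: {'OK' if found else 'MISSING'}")
```

To test a real system, pass the saved output of `dmesg` to `check_dmesg` instead of `SAMPLE`.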

Credit to AMD Corporation and the key staffers who supported us through our endless questions.

Less credit for promising APU support for a long time and not delivering it, even though most of the support is already in the ROCm software stack.